A PowerBook G4 is barely fast enough to run a large language model

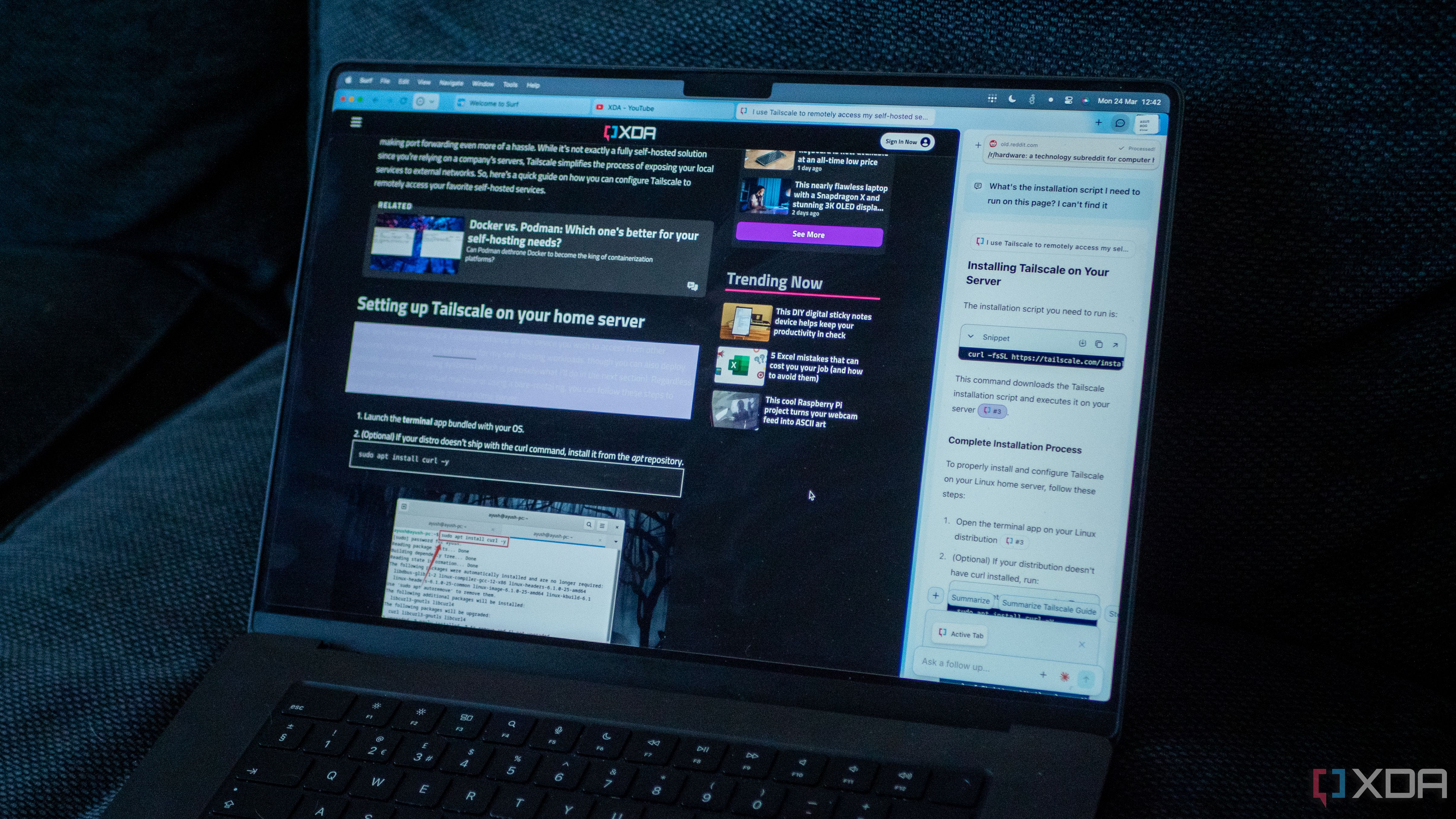

A software developer has proven it is possible to run a modern LLM on old hardware like a 2005 PowerBook G4, albeit nowhere near the speeds expected by consumers.

A PowerBook G4 running a TinyStories 110M Llama2 LLM inference — Image credit: Andrew Rossignol/TheResistorNetwork

Most artificial intelligence projects, such as the constant push for Apple Intelligence, lean on having a device powerful enough to handle queries locally. As a result, newer computers and processors, such as the latest A-series chips in the iPhone 16 generation, tend to be used for AI applications, simply because they have enough performance for it to work.

In a blog post published on Monday by The Resistor Network, Andrew Rossignol, the brother of Joe Rossignol at MacRumors, writes about his work getting a modern large language model (LLM) to run on older hardware. The machine available to him was a 2005 PowerBook G4, equipped with a 1.5GHz processor, a gigabyte of memory, and architectural limitations such as a 32-bit address space.

