Google Messages starts rolling out sensitive content warnings for nude images

Google Messages has started rolling out sensitive content warnings for nudity after first unveiling the feature late last year. If the AI-based system detects a message containing a nude image, the feature takes two key actions: it blurs the photo, and it triggers a warning if your child tries to open, send or forward it. It also provides resources for you and your child to get help. All detection happens on the device to keep images and data private.

Sensitive content warnings are enabled by default for supervised users and signed-in unsupervised teens, the company notes. Parents control the feature for supervised users via the Family Link app, but unsupervised teens aged 13 to 17 can turn it off in Google Messages settings. The feature is off by default for everyone else.

With sensitive content warnings enabled, images are blurred and a "speed bump" prompt opens that lets the user block the sender and links to a resource page explaining why nudes can be harmful. Next, it asks whether the user still wants to open the message, with "No, don't view" and "Yes, view" options. If the user tries to send a nude image, it offers similar options. So it doesn't completely block children from sending nudes; it merely warns them.

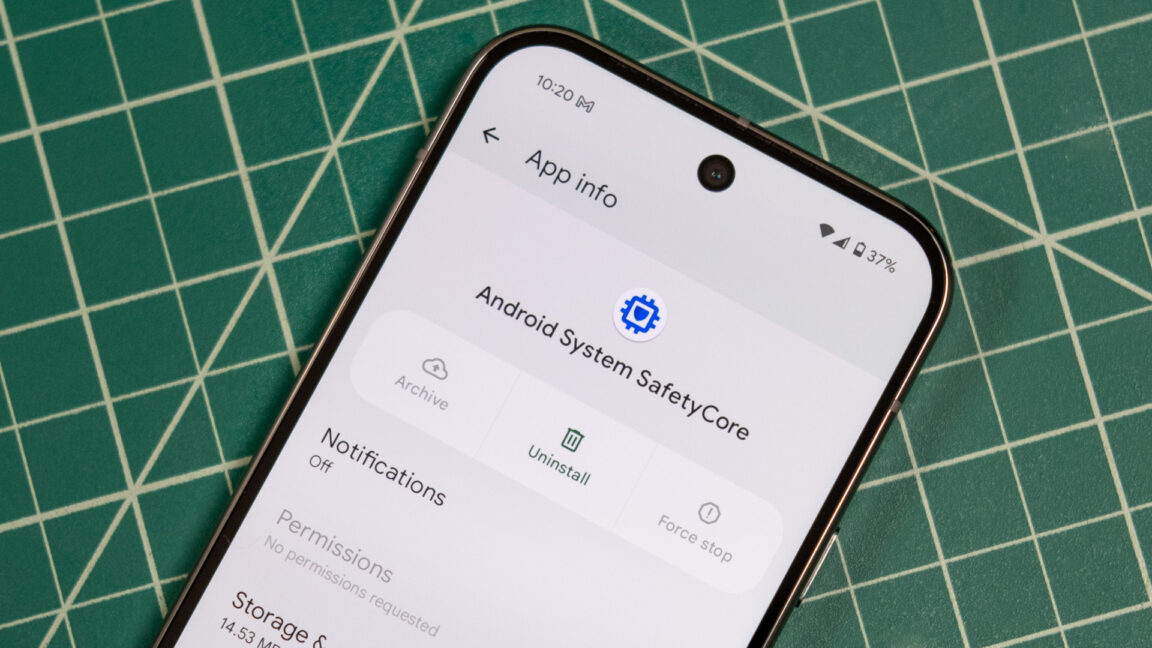

The feature is powered by Google's SafetyCore system, which allows AI-powered on-device content classification without sending "identifiable data or any of the classified content or results to Google servers," according to the company. It only just started arriving on Android devices and is not yet widely available, 9to5Google wrote. This article originally appeared on Engadget at https://www.engadget.com/apps/google-messages-starts-rolling-out-sensitive-content-warnings-for-nude-images-130525437.html?src=rss

.jpg)