Everyone wants the viral AI doll – but it’s a privacy nightmare waiting to happen

Millions have already given away their face and sensitive data to jump on the latest viral AI trend – and that's bad for your privacy and security.

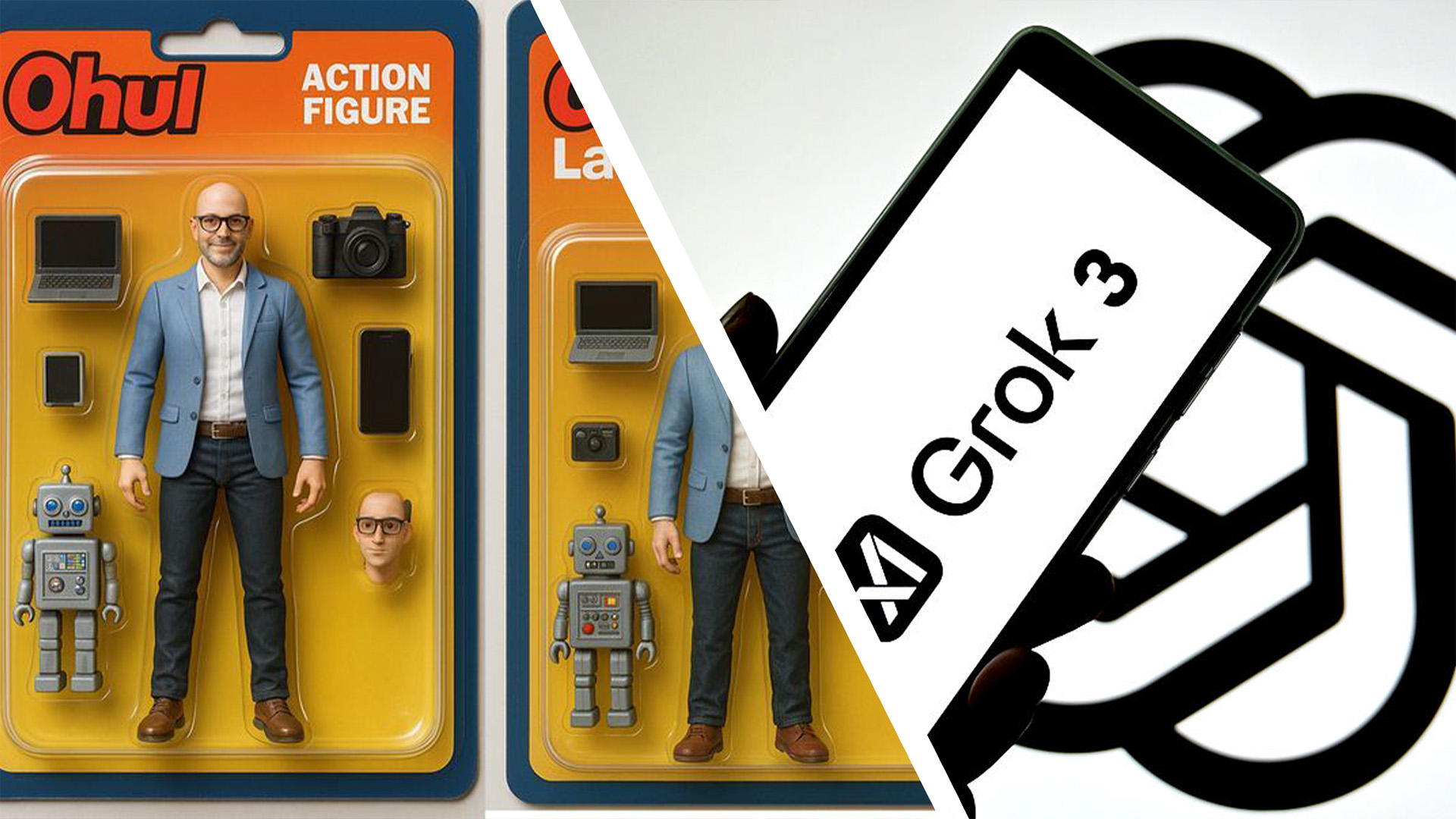

Right after the Ghibli-style AI image trend began to wear off, ChatGPT and similar tools found a new way to encourage people to upload their selfies into their systems. This time, the goal is to make an action figure version of themselves.

The drill is always the same. A photo and a few prompts are enough for the AI image generator to turn you into a packaged Barbie-style doll, with accessories linked to your job or interests placed right next to you. The last step? Sharing the results on your social media accounts, of course.

I must admit that the more I scroll through feeds filled with AI doll pictures, the more concerned I become. This is not only because it's yet another trend misusing the power of AI. Millions of people have willingly shared their faces and sensitive information simply to jump on the umpteenth social bandwagon, most likely without thinking about the privacy and security risks that come with it.

A privacy deceit

Let's start with the obvious – privacy.

Both the AI doll and Studio Ghibli AI trends have pushed more people to feed their pictures to ChatGPT, Grok, and similar tools. Many of these users had perhaps never used LLM software before. I certainly saw too many families uploading their kids' faces to get the latest viral image over the past couple of weeks.

It's true that AI companies are known to scrape the web for information and images to train their models. So many have probably thought: how different is this from sharing a selfie on my Instagram page?

There's a catch, though. By voluntarily uploading your photos to AI image generators, you give the provider more ways to legally use that information or, more precisely, your face.